Links

- https://www.reddit.com/r/MachineLearning/

- AI team of LIP6

- http://www.cse.ohio-state.edu/mlss09/

- http://videolectures.net/

- http://iris.usc.edu/Vision-Notes/bibliography/applicat802.html

- http://simsearch.yury.name/tutorial.html

- http://similar-images.googlelabs.com

- http://icos-hd.irisa.fr/demo/index.php

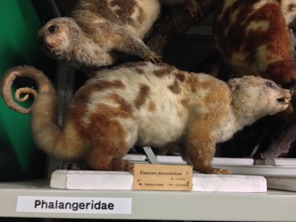

Amazing Sorbonne Univ collection (MNHN)

Tech News

- http://www.plinkart.com/

- http://www.google.com/mobile/goggles/#landmark

- http://news.bbc.co.uk/2/hi/technology/8193951.stm

- http://www.technologyreview.com/web/23213/?nlid=2268

- http://www.arhv.lhivic.org/

- http://www.28millimetres.com/women/

- http://www.lesechos.fr/info/innovation/4637607.htm

Conferences

ACM International Conference on Multimedia Information Retrieval http://www.mir2008.org/

2008 IEEE International Workshop on Machine Learning for Signal Processing http://mlsp2008.conwiz.dk/

Old News

2009

- Dec09: IUF nomination; PhD Def. of M. Hanif and E. Aldea

- Nov09: ASAP, a new ANR project on deep learning; 3 papers presented at ICIP 09 in Egypt; PhD Def. of Corina Iovan; organization of a GdR ISIS workshop on scalability and cross-media

- Oct09: Organized a Digital Video event at Sibgrapi09, Brazil

- Sept09: 2 papers in the ISPRS workshop CMRT; PhD Def. of A. Auclair, MI. Akodjènou and J. Forest

- March09: ITOWNS presentation at the DAPA day

- Feb09: One-week invited stay at the I2R institute in Singapore

- Committee and reviewing activities:

- Program committee of CMRT09

- Program committee of SinFra’09

- Technical program committee of the 15th International MultiMedia Modeling Conference (MMM2009) http://mmm2009.eurecom.fr/

- Program committee of CBMI 2009

- Program committee of the International Conference on Imaging Theory and Applications 2009 http://www.imagapp.org//cfp.htm

- Ad hoc reviewer for the NSF (USA), 2009 program

- CR2/CR1 recruitment committee of INRIA Rocquencourt, 2009

- Reference expert for Agropolis Fondation, 2009

2008

- Dec08: seminar at LEAR lab.

- ANR review presentation

- Paper in ICPR 08 on fast LSH kernels

- Paper in ICIP 08 on distributed CBIR

- Paper in CIKM 08 on high-dim indexing

- Paper in TrecVid workshop TVS 08

- PhD Def. of Diane Larlus Nov 28, LEAR lab, INRIA Grenoble

- PhD Def. of David Picard Dec 5, ETIS lab

Some PhD theses for which I served (or am scheduled to serve) on the jury:

[2008] Nicolas Burrus

Apprentissage a contrario et architecture efficace pour la détection d’évènements visuels significatifs (A contrario learning and an efficient architecture for detecting significant visual events)

[2008] Diane Larlus

Création et utilisation de vocabulaires visuels pour la catégorisation d’images et la segmentation de classes d’objets (Creating and using visual vocabularies for image categorization and object class segmentation)

Abstract:

This thesis deals with the interpretation of static images, with a focus on recognizing object categories. We consider several different approaches, which are all variations on the bag-of-words model, and all use local image descriptors. The first part of the thesis examines different methods for creating visual vocabularies. We aim to create vocabularies which perform well for image categorization. The first method proposed uses dense image representations. Feature descriptors are extracted and then quantized to visual words, using a two-stage clustering algorithm. We provide a full quantitative evaluation of the method. The second method we propose for creating visual vocabularies integrates the vocabulary into an image representation model. This generative model uses the image class labels via latent variables describing object aspect. Training the model leads to the creation of a compact and discriminative set of visual words. Next we show that traditional visual vocabularies (like the ones used above) can be replaced by random decision trees. Each tree provides a quantization of the space of descriptor representations into visual words. Since the trees are constructed using the image class labels, they have good classification performance. Each node uses a simple classifier, so processing of test images is fast. The random trees are also used for online learning of saliency maps which guide the process of descriptor sampling. The second part of the thesis deals with object category segmentation. We first present a method which uses an extended latent aspect based model. Instead of considering aspects at the image level, the method models them at the sub-region level. These semi-global regions can overlap and share information, allowing the local predictions to be improved. The local classifications are based on visual word statistics. 
The second segmentation method combines the low-level consistency properties of a Markov Random Field with an appearance model which provides higher-level constraints. The appearance model is based on regions which each represent a single object as a set of visual words. We also evaluate using decision trees instead of visual words. Finally, the method is applied to a real-world visual search problem for a humanoid robot. The method is used to generate hypotheses about the position of an object in the robot’s field of view.
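As a rough illustration of the basic bag-of-words step this abstract builds on (local descriptors quantized to visual words, then counted per image), here is a minimal Python sketch. It assumes a vocabulary has already been learned by some clustering step and uses plain nearest-centroid assignment; it does not reproduce the two-stage clustering, the generative model, or the random-tree quantizers described above, and `bow_histogram` is a hypothetical helper name.

```python
from collections import Counter
from math import dist  # Euclidean distance, Python 3.8+


def bow_histogram(descriptors, vocabulary):
    """Quantize each local descriptor to its nearest visual word and return
    the normalized histogram of word counts (hypothetical helper)."""
    # Index of the nearest visual word (cluster center) for each descriptor.
    words = [
        min(range(len(vocabulary)), key=lambda k: dist(d, vocabulary[k]))
        for d in descriptors
    ]
    counts = Counter(words)
    n = len(descriptors)
    # Frequency of each visual word; all zeros for an image with no descriptors.
    return [counts[k] / n if n else 0.0 for k in range(len(vocabulary))]


# Toy example: a 3-word vocabulary in a 2-D descriptor space.
vocab = [(0.0, 0.0), (1.0, 1.0), (5.0, 5.0)]
descs = [(0.1, -0.1), (0.9, 1.2), (1.1, 0.8), (4.8, 5.1)]
print(bow_histogram(descs, vocab))  # [0.25, 0.5, 0.25]
```

The resulting fixed-length histogram is the kind of image signature a standard classifier would typically consume for categorization.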

[2008] Karim Yousfi

Segmentation hiérarchique optimale par injection d’a priori radiométrique, géométrique ou spatial (Optimal hierarchical segmentation by injecting radiometric, geometric or spatial priors)

[2008] Trang Vu

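Apprentissage d’ordonnancements pour la constitution de Corpus d’évaluation et pour l’Agrégation de listes en Recherche d’Information (Learning to rank for building evaluation corpora and for list aggregation in information retrieval)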

[2008] Anne-Lise Chesnel

Damage assessment on buildings due to major disasters using very high resolution satellite multimodal images

[2008] Anastasia Krithara

Learning Aspect Models with Partially Labeled Data

Abstract:

Machine learning techniques have been used for various information access tasks, such as categorization, clustering or information extraction. Acquiring the annotated data necessary to apply supervised learning techniques is a major challenge for these applications, especially in very large collections. Annotating the data usually requires humans who can read and understand them, and is therefore very costly, especially in technical domains. In recent years, two main approaches have been explored in this direction, namely semi-supervised learning (SSL) and active learning. Both paradigms address the issue of annotation cost, but from two different perspectives. On the one hand, semi-supervised learning tries to learn from both labeled and unlabeled data. On the other hand, active learning tries to find the most informative examples to label, in order to minimize the number of labeled examples necessary for learning.

Both methods try to reduce the human labeling effort.

In this thesis, we address the problem of reducing this annotation burden. In particular, we investigate extensions of aspect models for the classification task when the training set is partially labeled. We propose two semi-supervised PLSA algorithms that incorporate a mislabeling error model. We then combine these semi-supervised algorithms with two active learning algorithms. Our models are developed as extensions of the classification system previously developed at Xerox Research Centre Europe. We evaluate the proposed models on three well-known datasets and on one dataset from a Xerox business group.
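The abstract describes active learning as choosing the most informative examples to label. One common way to make that concrete is uncertainty sampling: query the unlabeled examples whose predicted class distribution has the highest entropy. The minimal Python sketch below illustrates only that generic criterion; it is an assumption for illustration, not one of the two active learning algorithms used in the thesis, and the function names and toy probabilities are hypothetical.

```python
from math import log


def entropy(probs):
    """Shannon entropy (in nats) of a class-probability vector."""
    return -sum(p * log(p) for p in probs if p > 0)


def select_most_uncertain(pool, k=1):
    """Return the indices of the k unlabeled examples whose predicted class
    distribution has the highest entropy (uncertainty sampling)."""
    ranked = sorted(range(len(pool)), key=lambda i: entropy(pool[i]), reverse=True)
    return ranked[:k]


# Hypothetical predicted class probabilities for four unlabeled documents.
pool = [[0.95, 0.05], [0.55, 0.45], [0.80, 0.20], [0.50, 0.50]]
print(select_most_uncertain(pool, k=2))  # [3, 1]
```

The selected documents would be sent to a human annotator, added to the labeled set, and the model retrained before the next query round.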

[2008] Eric Galmar

Representation and Analysis of Video Content for Automatic Object Extraction

Abstract:

The recent explosion of multimedia applications has created an increasing demand for advanced search and indexing of multimedia information. Among these, digital video content is certainly one of the most complex to analyze and represent. From this point of view, video objects are considered essential elements for handling video content, as they provide an accurate and flexible representation for numerous applications such as semantic content analysis or video coding.

Automated object extraction from videos is a difficult task that has been widely addressed in past years in the context of MPEG-4 video coding. Methods developed so far mostly rely on motion estimation to define the object model and adapt these models from frame to frame. However, it is widely agreed that the robustness of motion estimation and the accuracy of the object support depend on each other. In this thesis, we first introduce a framework for video object modeling based on a spatiotemporal graph representation. The model describes both the internal structure of object regions and their spatiotemporal relationships inside the shot. This approach is fully supported by the MPEG-7 multimedia standard, where the information is structured hierarchically in scenes, shots, objects and regions. As the next step, we propose a 2D+T scheme for the extraction of spatiotemporal volumes. The method we developed uses local and global properties of the volumes to propagate them coherently in space and time. We then investigate grouping spatiotemporal regions into complex objects using motion models. To address the difficulty of building motion models, we propose a method to propagate and match moving objects to areas where motion information is less relevant. In a third step, we investigate the benefit of semantic knowledge for spatiotemporal segmentation and labeling of video shots. For this purpose, we extend to video shots a knowledge-based system that provides fuzzy semantic labeling of image regions. The shot is split into smaller block units and, for each block, volumes are sampled temporally into frame regions that receive semantic labels. The semantic labels are then propagated within volumes, and a consistent labeling of the shot is finally obtained by joint propagation and re-estimation of the semantic labels between the temporal segments. Finally, we explore the capabilities of the representation for indexing and retrieval tasks.
We first consider a region-based indexing framework, the Vector Space Model. We present a study of the model's properties and show that the spatiotemporal representation gives more robustness to the visual signatures than the traditional keyframe representation. This dissertation concludes by proposing a strategy to efficiently compare object graphs. To this end, we introduce a similarity measure between graphs that we then use to search for a given object.
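In the Vector Space Model, each item is represented as a vector of weighted terms and items are compared by cosine similarity. The sketch below assumes that each shot has already been reduced to a bag of discrete "visual terms" (for instance quantized region descriptors) and uses standard tf-idf weighting; the weighting scheme, the term labels and the function names are illustrative assumptions, not the signatures studied in the thesis.

```python
from collections import Counter
from math import log, sqrt


def tfidf_vectors(docs):
    """docs: list of lists of visual-term ids (one list per shot).
    Returns one dict per shot mapping term -> tf * idf weight."""
    n = len(docs)
    # Document frequency: in how many shots each term occurs.
    df = Counter(t for doc in docs for t in set(doc))
    vectors = []
    for doc in docs:
        tf = Counter(doc)
        vectors.append({t: (c / len(doc)) * log(n / df[t]) for t, c in tf.items()})
    return vectors


def cosine(u, v):
    """Cosine similarity between two sparse weight vectors (dicts)."""
    dot = sum(w * v.get(t, 0.0) for t, w in u.items())
    nu = sqrt(sum(w * w for w in u.values()))
    nv = sqrt(sum(w * w for w in v.values()))
    return dot / (nu * nv) if nu and nv else 0.0


# Hypothetical shots described by their region terms.
shots = [["sky", "grass", "grass"], ["sky", "grass"], ["car", "road", "road"]]
vecs = tfidf_vectors(shots)
print(round(cosine(vecs[0], vecs[1]), 3))  # 0.949
print(cosine(vecs[0], vecs[2]))            # 0.0
```

Ranking all shots by their cosine score against a query shot gives a simple retrieval list; shots sharing no weighted term score zero.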

[2008] Lech Szumilas

Scale and Rotation Invariant Shape Matching

Abstract:

Recognition of objects from images is one of the central research topics of computer vision.

The use of shape for recognizing objects has been actively studied since the beginning of object recognition in the 1950s. Several authors suggest that object shape is more informative than appearance: appearance properties such as texture and color vary more between object instances than shape does (e.g. bottles, caps, cars, airplanes, cows, horses). Recent methods concentrate on extracting shape features and learning object models directly from images, which raises problems such as object occlusion, incomplete and often fragmented object boundaries, and varying camera viewpoints. While these approaches are designed to learn object models from fragmented and incomplete object boundaries, achieving invariance to rotation, scale and affine transformations has not been fully solved.

This thesis addresses the problem of learning object models that use shape properties with full rotation and scale invariance. A new approach is proposed in which invariance to image transformations is obtained through invariant matching rather than the typical invariant features. This philosophy is especially applicable to shape features, represented by edges detected in images, which do not have a specific scale or orientation until assembled into an object. Our primary contributions are: a new shape-based image descriptor that encodes a spatial configuration of edge parts; a rotation- and scale-invariant technique for matching descriptors; and shape clustering that can extract frequently appearing image structures from training images without supervision. This thesis also presents an overview of the object recognition field and our other contributions in the area of local appearance-based methods, texture detection and image segmentation.

Keywords: object recognition, shape, image descriptors, interest points.
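The thesis obtains invariance through matching rather than through invariant features. As a toy illustration of that principle only (not the descriptor or matching technique of the thesis), the sketch below compares two hypothetical angular histograms by searching over all circular shifts, so a rotated pattern still matches exactly. Scale would need an analogous search over scale factors, which is not shown.

```python
def rotation_invariant_match(a, b):
    """Compare two angular histograms (one bin per orientation sector) by
    trying every circular shift of b and keeping the best one.
    Returns (smallest squared distance, shift that achieves it)."""
    if len(a) != len(b):
        raise ValueError("histograms must have the same number of bins")
    n = len(a)
    best_dist, best_shift = float("inf"), 0
    for s in range(n):
        # Shifting by s bins corresponds to rotating by s * 360 / n degrees.
        d = sum((a[i] - b[(i + s) % n]) ** 2 for i in range(n))
        if d < best_dist:
            best_dist, best_shift = d, s
    return best_dist, best_shift


# b is a rotated by 3 sectors (180 degrees with 6 bins).
a = [3, 1, 0, 0, 2, 0]
b = [0, 2, 0, 3, 1, 0]
print(rotation_invariant_match(a, b))  # (0, 3)
```

The descriptor itself stays orientation-dependent; the invariance comes entirely from the search performed at matching time, which is the trade-off the abstract describes.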

[2008] Avik Bhattacharya

Indexing of satellite images using structural information

Abstract:

From the advent of human civilization on our planet to modern urbanization, road networks have not only provided a means for transporting goods but have also helped us cross cultural boundaries. The properties of road networks vary considerably from one geographical environment to another. The road networks present in a satellite image can therefore be used to classify and retrieve such environments. In this work, we have defined several such environments and classified them using geometrical and topological features computed from the road networks occurring in them. Due to certain limitations of the extraction methods, network extraction partly failed in some urban regions containing narrow and dense road structures. This loss of information was circumvented by segmenting the urban regions and computing a second set of geometrical and topological features from them […].

Keywords: satellite images, road networks, urban regions, classification, indexing.

[2007] Thomas Retornaz

Automatic detection of text from natural scenes. A semantic descriptor for content-based image retrieval

Abstract:

Multimedia databases, both personal and professional, are continuously growing, and automatic solutions are becoming mandatory. The research community devotes growing effort to content-based image indexing, but the semantic gap is difficult to cross: the low-level descriptors used for indexing are not effective enough for convenient handling of large, generic image databases. The text present in a scene is usually linked to the semantic context of the image and constitutes a relevant descriptor for content-based image indexing.

In this thesis, we present an approach to automatic detection of text in natural scenes, designed to handle text of different sizes, orientations and backgrounds. The system uses a non-linear scale space based on the ultimate opening operator (a morphological numerical residue). In a first step, we study the action of this operator on real images and propose solutions to overcome its intrinsic limitations. In a second step, the operator is used in a text detection framework that also includes various text categorisation tools.

The robustness of our approach is demonstrated on two different datasets. First, we took part in the ImagEval evaluation campaign, and our approach was ranked first in the text localisation contest. Second, we produced results (using the same framework) on the freely available ICDAR dataset; these results are comparable with the state of the art. Lastly, a demonstrator was built for EADS; for confidentiality reasons, this work could not be included in this manuscript.

[2007] Laurence Boudet

Qualification automatique de modèles 3D de bâtiments à partir d’images aériennes haute résolution (Automatic qualification of 3D building models from high-resolution aerial images)

[March 2006] Roger Trias Sanz

Semi-automatic high-resolution rural land cover classification

[Nov 2006] W. Touhami

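Identification et classification automatique de régions d’intérêt dans des images tomographiques : application aux kystes du rein (Automatic identification and classification of regions of interest in tomographic images: application to kidney cysts)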

[Dec 2005] Seriy Kosinov

Machine Learning Approach to Semantic Augmentation of Multimedia Documents for Efficient Access and Retrieval

[June 2005] Nesrine Chehata

Modélisation 3D de scènes urbaines à partir d’images satellitaires à très haute résolution (3D modeling of urban scenes from very high resolution satellite images)

[May 2005] Greet Frederix

Beyond Gaussian Mixture Models: Unsupervised Learning with applications to Image Analysis